In 2018, Sam Cole, a reporter at Motherboard, discovered a new and disturbing corner of the internet. A Reddit user by the name of “deepfakes” was posting nonconsensual fake porn videos using an AI algorithm to swap celebrities’ faces into real porn. Cole sounded the alarm on the phenomenon, right as the technology was about to explode. A year later, deepfake porn had spread far beyond Reddit, with easily accessible apps that could “strip” clothes off any woman photographed.

Since then deepfakes have had a bad rap, and rightly so. The vast majority of them are still used for fake pornography. A female investigative journalist was severely harassed and temporarily silenced by such activity, and more recently, a female poet and novelist was frightened and shamed. There’s also the risk that political deepfakes will generate convincing fake news that could wreak havoc in unstable political environments.

But as the algorithms for manipulating and synthesizing media have grown more powerful, they’ve also given rise to positive applications, as well as some that are humorous or mundane. Here is a roundup of some of our favorites in rough chronological order, and why we think they’re a sign of what’s to come.

Whistleblower shielding

TEUS MEDIA

In June, Welcome to Chechnya, an investigative film about the persecution of LGBTQ individuals in the Russian republic, became the first documentary to use deepfakes to protect its subjects’ identities. The activists fighting the persecution, who served as the main characters of the story, lived in hiding to avoid being tortured or killed. After exploring many methods to conceal their identities, director David France settled on giving them deepfake “covers.” He asked other LGBTQ activists from around the world to lend their faces, which were then grafted onto the faces of the people in his film. The technique allowed France to preserve the integrity of his subjects’ facial expressions and thus their pain, fear, and humanity. In total the film shielded 23 individuals, pioneering a new form of whistleblower protection.

Revisionist history

PANETTA AND BURGUND

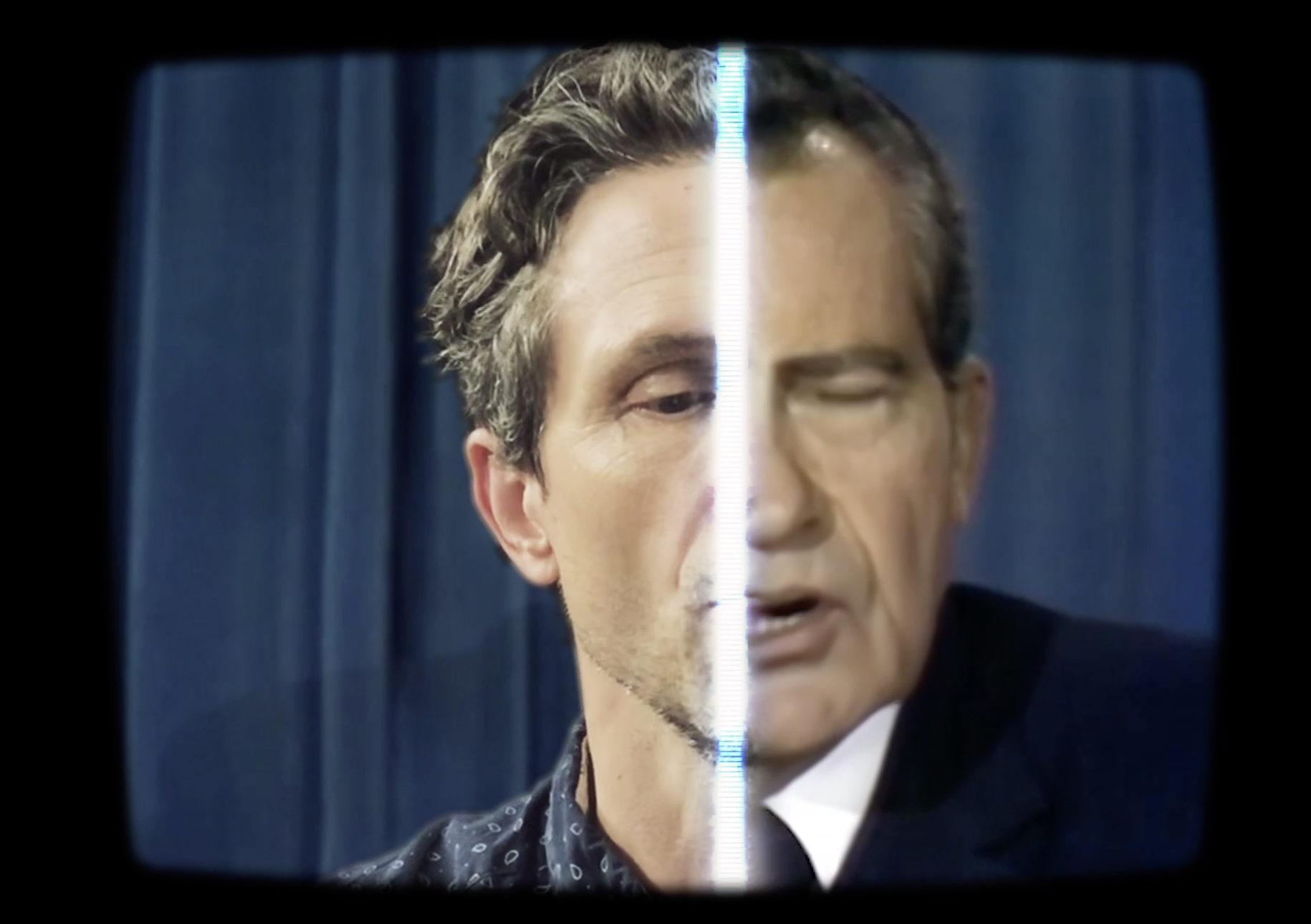

In July, two MIT researchers, Francesca Panetta and Halsey Burgund, released a project to create an alternative history of the 1969 Apollo moon landing. Called In Event of Moon Disaster, it uses the speech that President Richard Nixon would have delivered had the momentous occasion not gone according to plan. The researchers partnered with two separate companies for deepfake audio and video, and hired an actor to provide the “base” performance. They then ran his voice and face through the two types of software, and stitched them together into a final deepfake Nixon.

While this project demonstrates how deepfakes could create powerful alternative histories, another one hints at how deepfakes could bring real history to life. In February, Time magazine re-created Martin Luther King Jr.’s March on Washington for virtual reality to immerse viewers in the scene. The project didn’t use deepfake technology, but Chinese tech giant Tencent later cited it in a white paper about its plans for AI, saying deepfakes could be used for similar purposes in the future.

Memes

MS TECH | NEURIPS (TRAINING SET); HAO (COURTESY)

In late summer, the memersphere got its hands on simple-to-make deepfakes and unleashed the results into the digital universe. One viral meme in particular, called “Baka Mitai” (pictured above), quickly surged as people learned to use the technology to create their own versions. The specific algorithm powering the madness came from a 2019 research paper; it lets a user animate a photo of one person’s face with a video of someone else’s. The effect isn’t high quality by any stretch of the imagination, but it sure produces quality fun. The phenomenon is not entirely surprising; play and parody have been a driving force in the popularization of deepfakes and other media manipulation tools. It’s why some experts emphasize the need for guardrails to prevent satire from blurring into abuse.