At its core, GPT-2 is a powerful prediction engine. It learned to grasp the structure of the English language by looking at billions of examples of words, sentences, and paragraphs, scraped from the corners of the internet. With that structure, it could then manipulate words into new sentences by statistically predicting the order in which they should appear.
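To make "statistically predicting the order" concrete, here is a deliberately tiny sketch. It is not how GPT-2 works internally: it swaps in a simple bigram counter, which only looks at the single previous word, and the toy corpus and function names are invented for illustration.

```python
from collections import Counter, defaultdict

def train_bigram(words):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Toy corpus (hypothetical); a real model trains on billions of words.
corpus = "the cat sat on the mat and the cat slept".split()
model = train_bigram(corpus)
print(predict_next(model, "the"))  # prints "cat" ("cat" follows "the" twice, "mat" once)
```

A model like GPT-2 conditions on a long stretch of preceding text rather than one word, but the basic idea of picking a likely continuation from learned statistics is the same.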

So researchers at OpenAI decided to swap the words for pixels and train the same algorithm on images in ImageNet, the most popular image bank for deep learning. Because the algorithm was designed to work with one-dimensional data (i.e., strings of text), they unfurled the images into a single sequence of pixels. They found that the new model, named iGPT, was still able to grasp the two-dimensional structures of the visual world. Given the sequence of pixels for the first half of an image, it could predict the second half in ways that a human would deem sensible.
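The unfurling step can be sketched in a few lines. This is an illustration under stated assumptions rather than OpenAI's code: it assumes pixels are read row by row (raster order), uses a made-up 4×4 grid of 0/1 values in place of a real image, and all function names are hypothetical.

```python
def flatten_image(image):
    """Unroll a 2D grid of pixels into a 1D list, row by row (assumed raster order)."""
    return [pixel for row in image for pixel in row]

def split_halves(sequence):
    """Split a pixel sequence into the half given to the model and the half to predict."""
    mid = len(sequence) // 2
    return sequence[:mid], sequence[mid:]

def unflatten(sequence, width):
    """Fold a 1D pixel sequence back into rows of the given width."""
    return [sequence[i:i + width] for i in range(0, len(sequence), width)]

# Hypothetical 4x4 "image"; each number stands in for one pixel value.
image = [
    [0, 0, 1, 1],
    [0, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 1],
]

sequence = flatten_image(image)          # 16 pixels in one long line
context, target = split_halves(sequence) # top two rows in, bottom two rows to predict
print(context)
print(target)
```

Under this ordering, the first half of the sequence corresponds to the top half of the image, so completing the sequence amounts to filling in the bottom half. Folding the result back with `unflatten(sequence, 4)` recovers the original grid.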

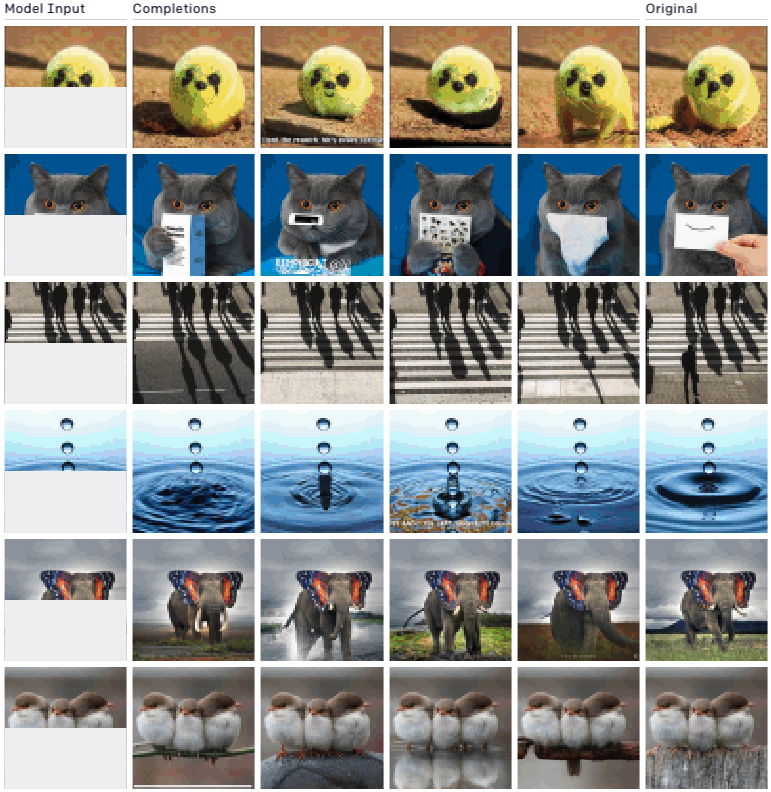

The image above shows a few examples. The left-most column is the input, the right-most column is the original, and the middle columns are iGPT’s predicted completions.

Image credit: OpenAI

The results are startlingly impressive and demonstrate a new path for using unsupervised learning, which trains on unlabeled data, in the development of computer vision systems. Computer vision researchers trialed such techniques in the mid-2000s, but the techniques fell out of favor as supervised learning, which uses labeled data, proved far more successful. The benefit of unsupervised learning, however, is that it allows an AI system to learn about the world without a human filter, and it significantly reduces the manual labor of labeling data.

The fact that iGPT uses the same algorithm as GPT-2 also shows the algorithm’s promising adaptability. This is in line with OpenAI’s ultimate ambition to achieve more generalizable machine intelligence.

At the same time, the method presents a concerning new way to create deepfake images. Generative adversarial networks, the most common category of algorithms used to create deepfakes in the past, must be trained on highly curated data. If you want to get a GAN to generate a face, for example, its training data should include only faces. iGPT, by contrast, simply learns enough of the structure of the visual world across millions and billions of examples to spit out images that could feasibly exist within it. Training the model is still computationally expensive, which creates a natural barrier to access, but that may not be the case for long.

OpenAI did not grant an interview request, but in an internal policy team meeting that MIT Technology Review attended last year, its policy director, Jack Clark, mused about the future risks of GPT-style generation, including what would happen if it were applied to images. “Video is coming,” he said, projecting where he saw the field’s research trajectory going. “In probably five years, you’ll have conditional video generation over a five- to 10-second horizon.” He then proceeded to describe what he imagined: you’d feed in a photo of a politician and an explosion next to them, and it would generate a likely output of that politician being killed.

Update: This article has been updated to remove the name of the politician in the hypothetical scenario described at the end.